Understanding the crawlability of a website and the crawl budget.

Crawlability is a fundamental aspect of technical SEO and refers to the ability of search engines to crawl the pages of a website and understand their content. The crawl budget includes the number of pages that a search engine crawler can crawl on a website within a certain timeframe. Good crawlability is crucial for search engines to find and index all important pages of a website, thereby making the best possible use of the available crawl budget.

In this article, I will explain what crawlability and crawl budget mean for your website, why they are important, and which factors influence them.

Definition of Crawlability

Crawlability describes the ability of search engine crawlers (also known as bots or spiders) to access, browse, and process the content of a website. A search engine crawler is an automated program that searches the internet to discover and index web pages. Some of the most well-known crawlers include Googlebot, Bingbot, and Yahoo Slurp.

Why is crawlability important?

Only pages that can be crawled by search engines are indexed and can therefore appear in search results. If important pages cannot be crawled, they lose the opportunity to rank well in search results, leading to a loss of visibility and traffic.

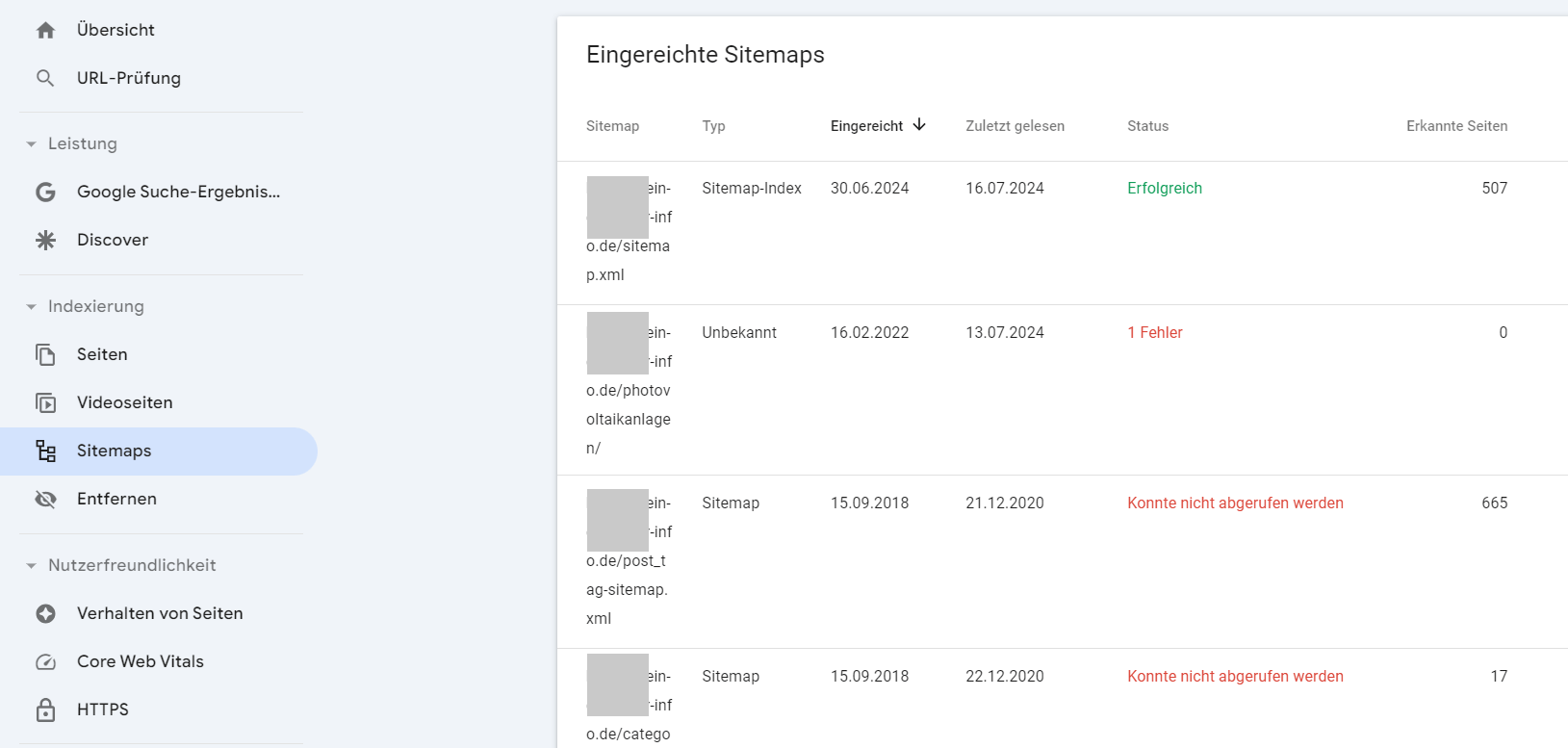

In this example, a customer uploaded sitemaps themselves to Google Search Console and made some mistakes. Only after years and an SEO audit by us was the sitemap added in the correct manner.

Factors that influence crawlability

- An XML sitemap is a file that lists all the important pages of a website and helps search engines find these pages. It is particularly useful for large websites or those with complex structures.

- The robots.txt file gives search engines instructions on which areas of the website they are allowed to crawl and which ones they are not. A misconfigured robots.txt file can inadvertently exclude important pages from being crawled.

- A clear and logical URL structure makes it easier for search engines to understand the relationships between different pages. Short, descriptive URLs are beneficial in this regard.

- A well-thought-out internal linking structure helps search engine crawlers discover all pages of a website and understand their significance. Pages that are deeply hidden within the website’s structure and have few internal links can be hard to find.

- Slow server response times can prevent search engine crawlers from crawling all pages of a website. Therefore, fast and reliable server performance is important for good crawlability.

- Error pages (e.g., 404 errors) and poorly configured redirects can hinder the crawl process. It is important to conduct regular checks and ensure that all links on the website are working.

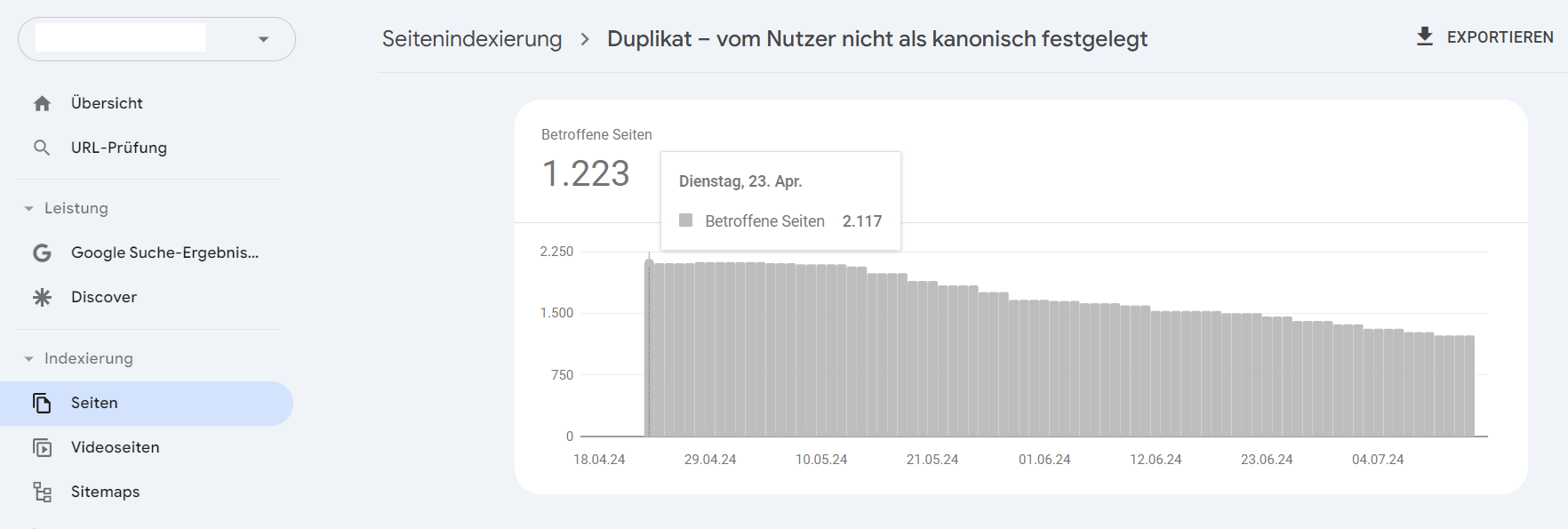

- Duplicate content can confuse search engines and lead them to not know which version of a page to index. The use of canonical tags can help solve this problem.

The Search Console also points out issues with duplicate content without canonical tags. Here, the client is gradually dismantling the pages after they were uncovered through an SEO audit by us.

The crawl budget and its significance for crawlability

The crawl budget is an important term in the field of technical SEO, referring to the number of pages that a search engine crawler visits on a website within a certain timeframe. It is directly related to a website’s crawlability, as efficient management of the crawl budget ensures that search engine crawlers can find and index the most important pages of a website.

The crawl budget consists of two main components:

- Crawl Rate Limit (Crawl Rate Limit): This is the number of requests a search engine crawler can send to a website without affecting server performance. Google automatically adjusts this rate to ensure that the server is not overloaded.

- Crawl Demand: This depends on the popularity and relevance of the pages. Frequently updated or particularly relevant pages have a higher crawl demand and are crawled more often.

Why is the crawl budget important?

Efficient management of the crawl budget is crucial because search engines crawl a limited number of pages per website within a given time frame. This is particularly important for large websites or websites with frequent updates, where prioritizing the most relevant pages is essential. An inefficient crawl budget can result in important pages not being crawled or indexed, which negatively affects visibility in search engines.

The above project already has over 1,200 duplicate pages that unnecessarily consume the crawl budget. Even worse is the impact of the 404 pages that the Search Console records. With such a high number, it quickly becomes clear that many irrelevant pages are being crawled, leading to a very inefficient use of the crawl budget. See here:

Relationship between crawl budget and crawlability

There are two very practical approaches to improve both crawlability and crawl budget:

1. Optimization of crawlability to maximize the crawl budget by avoiding duplicate content and improving internal linking.

Duplicate content wastes the crawl budget, as crawlers sift through the same content multiple times. By using canonical tags and avoiding redundant pages, the crawl budget can be utilized more efficiently. A well-structured internal linking helps search engine crawlers to quickly find and crawl the most important pages. This ensures that the crawl budget is not wasted on unimportant or hard-to-access pages. Technical errors such as 404 pages or slow loading times can hinder crawling and inefficiently use the crawl budget. Therefore, regular checks and optimizations of website performance are crucial for your success.

2. Efficient management of the crawl budget to improve crawlability

XML sitemaps help search engines find and prioritize the most important pages of a website. This contributes to efficient use of the crawl budget and ensures that the most important content is crawled. By properly configuring the robots.txt file, unnecessary pages can be excluded from crawling, allowing the crawl budget to focus on relevant pages. Additionally, regular updates and merging similar pages improve the relevance and recency of content, which in turn increases crawl demand and makes more efficient use of the crawl budget.

Conduct regular checks to identify and fix crawling obstacles such as 404 errors, slow loading times, and other technical issues.

Server response times, website performance, and their relationship with crawl budget.

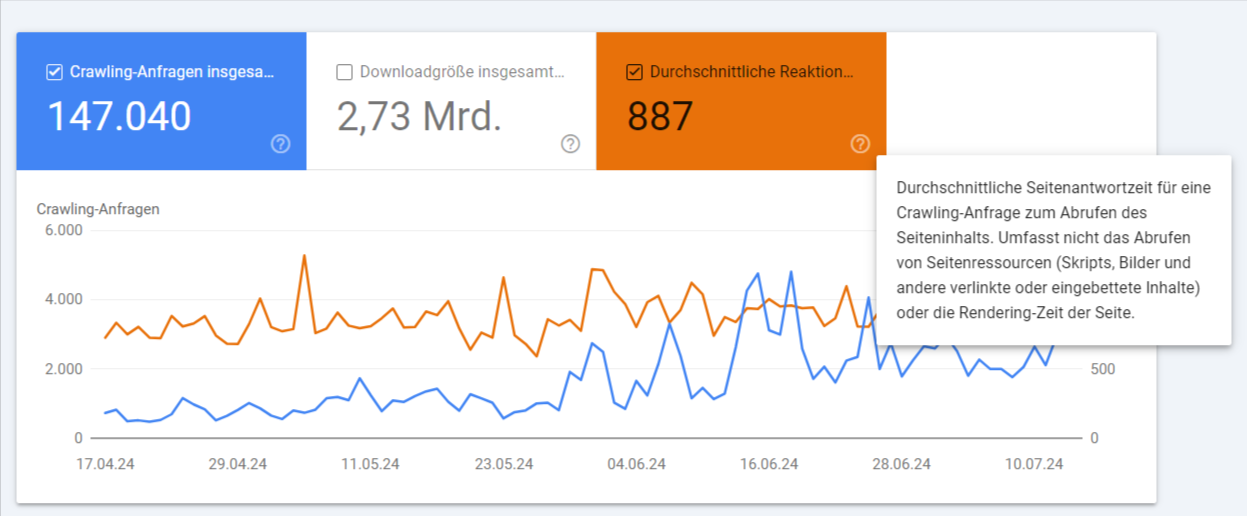

The server response times and the performance of a website have a direct impact on the crawl budget. A slow website can negatively affect the crawl budget, thereby reducing the efficiency of indexing by search engines.

The server response time is the time a web server takes to respond to a request from a user or search engine crawler. It is an important indicator of a website’s performance and can affect the crawl rate.

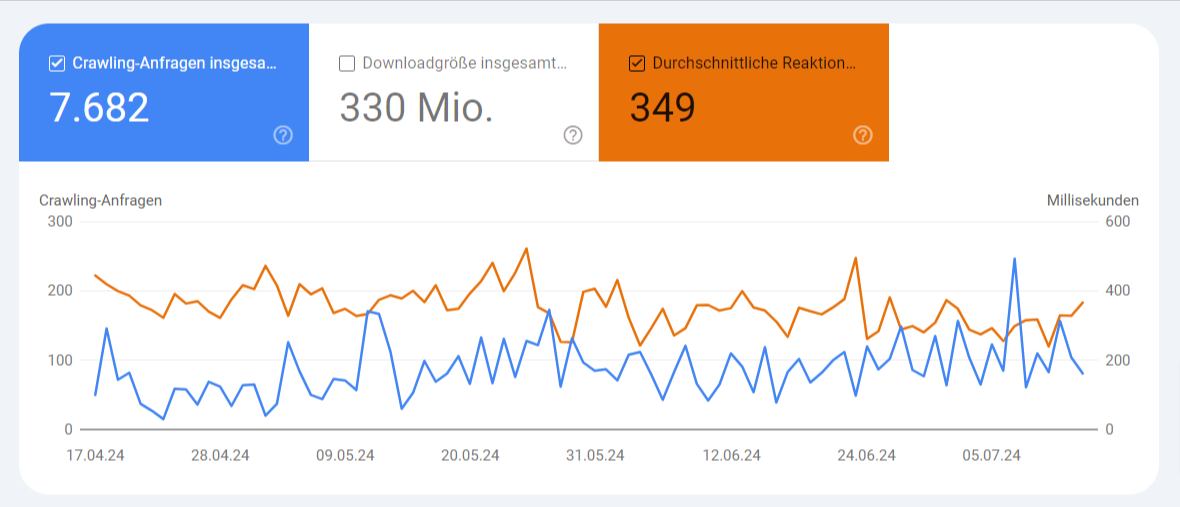

You can find information about your average server response time in the Google Search Console under Settings => Crawl Statistics. Here is an example with a very good average server response time:

What influence do server response times have on crawl budget?

- If a website has slow server response times, search engine crawlers take more time to crawl each page. This can lead to fewer pages being crawled within a certain time frame, as your crawl budget is limited.

- Google dynamically adjusts the crawl rate based on server response times. If the server responses are slow, Google reduces the number of requests to avoid impacting server performance. This means that fewer pages are crawled, making the crawl budget inefficient. Prioritization of fast websites: Search engines favor websites with fast loading times and quick server response times. A slow website may therefore receive a lower priority for crawling, negatively impacting the crawl budget.

- The overall performance of a website includes several aspects, such as loading speed, Time to First Byte (TTFB), and overall user-friendliness. A well-optimized website not only provides a better user experience but also allows for more efficient crawling.

So what can you do? Of course, improve loading times and reduce server load! Websites that load quickly allow search engine crawlers to crawl more pages in a shorter amount of time. This maximizes the crawl budget and ensures that important pages are crawled and indexed. A well-optimized website reduces server load and ensures that search engine crawlers can work efficiently without affecting server performance. This leads to better utilization of the crawl budget.

A fast and smooth user experience increases user dwell time and reduces the bounce rate. Search engines take these factors into account when assessing the relevance and quality of a website, which can positively impact its ranking.

Google itself states in its help article about crawl budget: If the website responds very quickly for a while, the limit is increased so that more connections can be used for crawling. If the website slows down or responds with server errors, the limit decreases and the Googlebot crawls less.

Here is another screenshot from a different project. The server response time value is over 800. This is almost an unfortunate norm, based on our observation. Many projects hardly manage to achieve average values below 500. Our personally achieved low point was 249 with TutKit.com, although we know of another Shopify project that managed to beat that value. Respect!

If you notice a relatively high value in server response times, you can take some practical measures to optimize server response times and website performance:

- A CDN distributes the load of content delivery across multiple servers worldwide, which reduces loading times and improves server response times. This is particularly useful for multilingual websites with international visitors.

- Compress and optimize your images and other media content to reduce loading times. Especially use modern image formats for the web like AVIF or WebP.

- Implement browser caching and server-side caching to reduce repeated requests and increase loading speed.

- Reduce the number of HTTP requests by combining CSS and JavaScript files and removing unnecessary plugins.

- Minimize the use of third-party scripts that can negatively impact the website’s loading time.

- Use tools like Google PageSpeed Insights, Lighthouse, and WebPageTest to regularly monitor the performance of your website and identify optimization potentials.

- Make sure your server has sufficient resources and is regularly maintained. Use modern web server technologies like NGINX or HTTP/2.

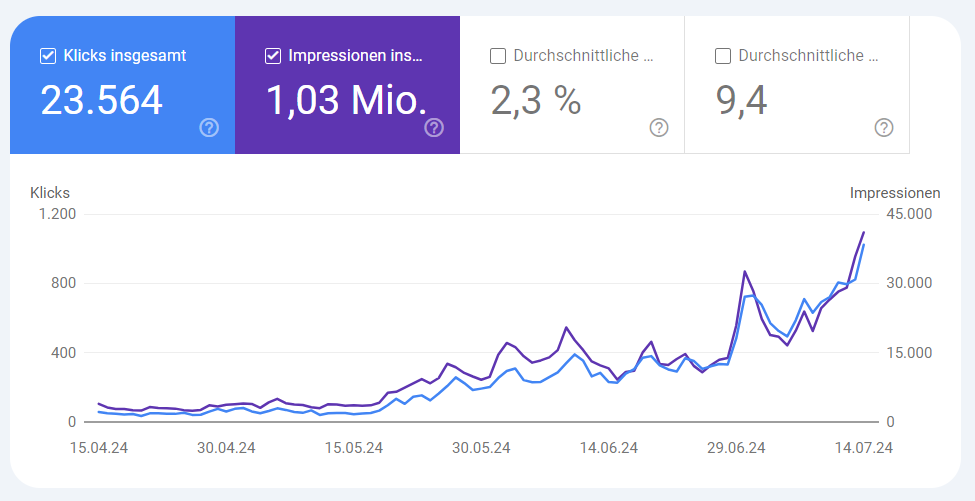

The last point in particular resolved the bottleneck in one of our client projects. In June, the issues surrounding Core Web Vitals were addressed in this project, and at the beginning of July, the server was switched with a proper upgrade to a modern setup and good performance. Suddenly, everything took a significant upward turn.

Despite all efforts, some pages may have difficulties with crawlability. Here are some common issues and possible solutions:

Faulty robots.txt file

Problem: A misconfigured robots.txt file can prevent search engine crawlers from crawling important pages. Solution: Check the robots.txt file to ensure that no relevant pages are inadvertently excluded. Use Google’s Robots.txt Tester tool.

Missing or incomplete XML sitemaps

Problem: Without a proper XML sitemap or with a faulty one, it can be difficult for search engines to discover all pages of a website. See example screenshot above. Solution: Create and submit a complete XML sitemap to the Google Search Console. And ensure the verification of its accuracy. Update this regularly.

Deeply nested pages

Problem: Pages that are many clicks away from the homepage may not be crawled. Also see the screenshot above from Audisto. Most pages were at level 6 to 8. Solution: Optimize internal linking to ensure that all important pages are accessible within a few clicks.

Duplicate Content

Problem: Duplicate content can cause search engines to have difficulty identifying the most relevant version of a page. Solution: Use canonical tags to indicate the main version of a page and avoid duplicate content.

Missing or incorrect redirects

Problem: Broken links and misdirected redirects can hinder the crawl process. Solution: Use 301 redirects for permanently moved content and avoid 302 redirects for permanent changes. Regularly check for broken links.

Excessive parameters in URLs

Problem: URLs with many parameters can be difficult for search engines to crawl. This is often the case with shop pages that, for example, provide variants (in weight, size, color, etc.) for products through parameters. Solution: Use URL parameters only when absolutely necessary, and structure URLs to be as simple and readable as possible.

Server issues

Problem: Server errors like 5xx errors can prevent search engine crawlers from accessing the pages. Solution: Monitor server performance and promptly fix any errors that occur. Ensure that your server has high availability.

Conclusion on Crawlability and Crawl Budget

The crawl budget is a factor you should be aware of for the efficient indexing and visibility of a website in search engines. By optimizing crawlability and effectively managing the crawl budget, you as a website operator can ensure that your most important pages are regularly crawled and indexed. This leads to better visibility in search engines and ultimately to more organic traffic and an improved user experience.

Server response times and the performance of a website play a crucial role in the efficient use of crawl budget. Slow server response times and a poorly optimized website can make the crawl budget inefficient and reduce the number of crawled pages. By optimizing loading speed, implementing caching strategies, and reducing HTTP requests, the performance of the website can be improved. This leads to faster server response times, a more efficient use of the crawl budget, and ultimately, better visibility and user experience.

Good crawlability is the foundation for successful indexing and visibility in search engines. By implementing the measures described, it can be ensured that search engine crawlers can find and index all important pages of a website. This not only improves the ranking in search results but also enhances the user experience and overall performance of the website.

To conclude with the words of Google itself: A faster website is more user-friendly and simultaneously allows for a higher crawling frequency. For the Googlebot, a fast website is a sign of well-functioning servers. This enables it to fetch more content through the same number of connections.

Do you have a lot of pages on your website but too many are not crawled or indexed? Contact us. We can help you!